Ivan Sosnovik

I am a Principal AI Research Scientist at the Autodesk AI Lab, based in the UK. My primary research interests lie in generative AI for engineering, 3D modeling, and solving general physics problems.

Previously, at the AWS GenAI Innovation Center, I focused on large language models (LLMs) and their applications in document processing, code completion, chemical engineering, and beyond.

I completed my PhD at Delta Lab, University of Amsterdam, under the supervision of Arnold Smeulders. I received my MSc from Phystech and Skoltech, where I explored the intersection of neural networks and topology optimization under the supervision of Ivan Oseledets. My bachelor's degree, also from Phystech, was in experimental low-temperature physics.

Email | CV | Google Scholar | GitHub | Twitter

Publications

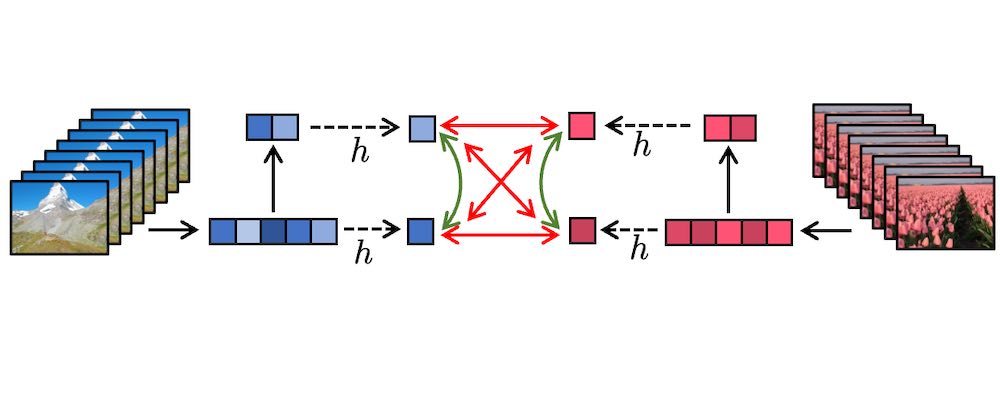

Learning to Summarize Videos by Contrasting Clips

Preprint, 2023

In this paper, we formulate video summarization as a contrastive learning problem. We implement the main building blocks that allow one to convert any video analysis model into an effective video summarizer.

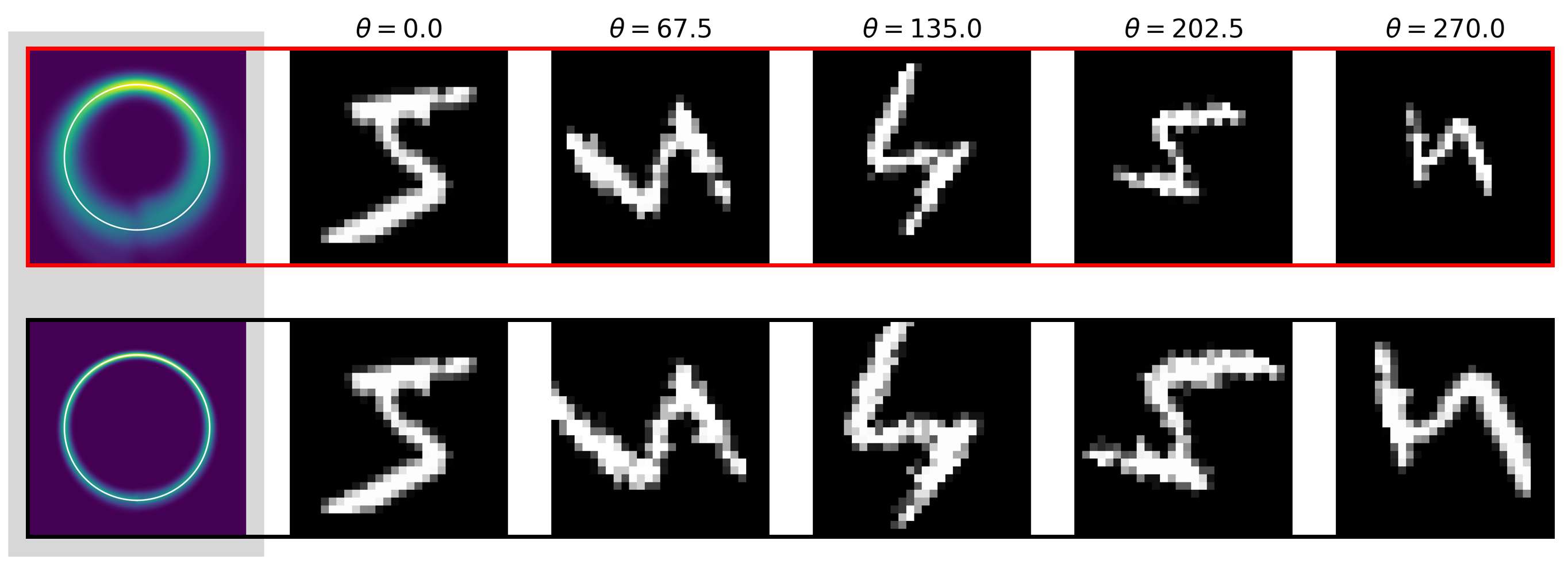

LieGG: Studying Learned Lie Group Generators

NeurIPS, 2022

We present LieGG, a method to extract symmetries learned by neural networks and to evaluate the degree to which a network is invariant to them. With LieGG, one can explicitly retrieve learned invariances in the form of Lie-group generators.

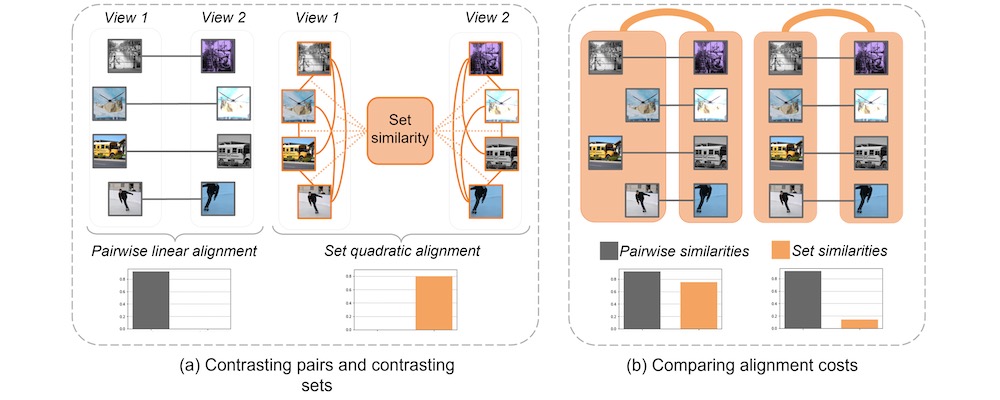

Contrasting quadratic assignments for set-based representation learning

ECCV, 2022

We go beyond contrasting individual pairs of objects by focusing on contrasting objects as sets. We use combinatorial quadratic assignment theory and derive a set-contrastive objective as a regularizer for contrastive learning methods.

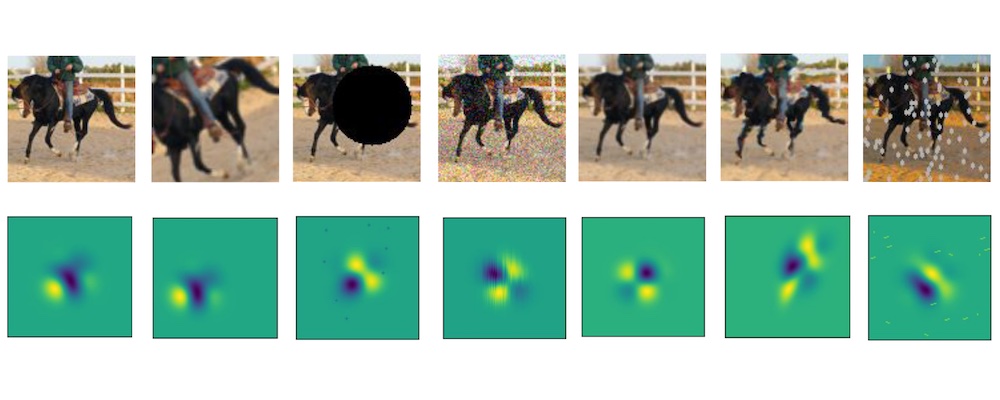

Wiggling Weights to Improve the Robustness of Classifiers

Preprint, 2021

While many robustness approaches train the network on augmented data, we integrate perturbations into the network architecture to achieve improved and more general robustness.

DISCO: accurate Discrete Scale Convolution (Best Paper Award!!!)

BMVC, Oral, 2021

We develop a better class of discrete scale equivariant CNNs, which are more accurate and faster than all previous methods. As a result of accurate scale analysis, they allow for a biased scene geometry estimation almost for free.

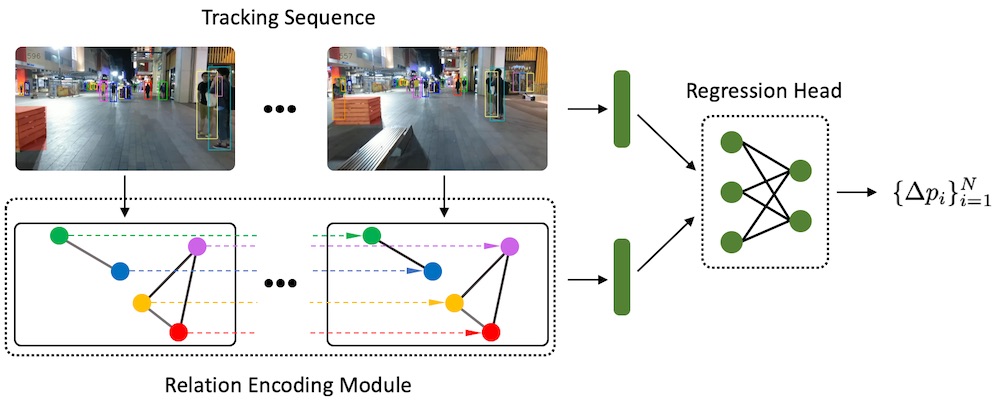

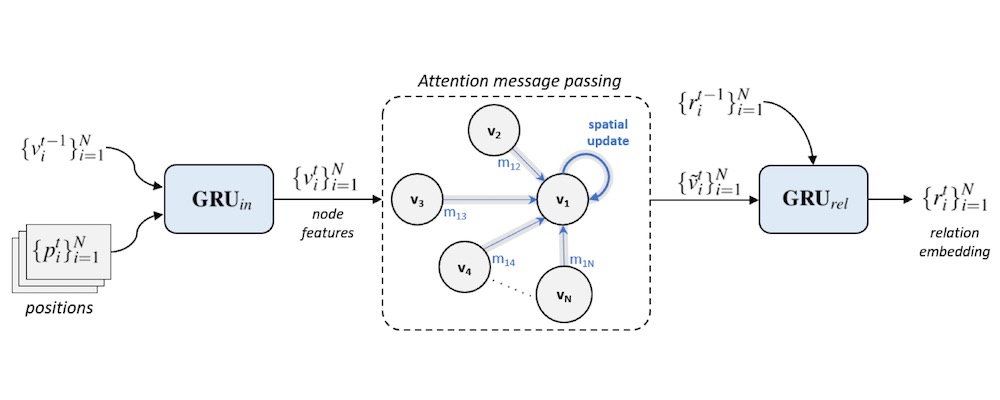

Relational Prior for Multi-Object Tracking

ICCV VIPriors Workshop, Oral, 2021

Tracking multiple objects individually differs from tracking groups of related objects. We propose a plug-in Relation Encoding Module which encodes relations between tracked objects to improve multi-object tracking.

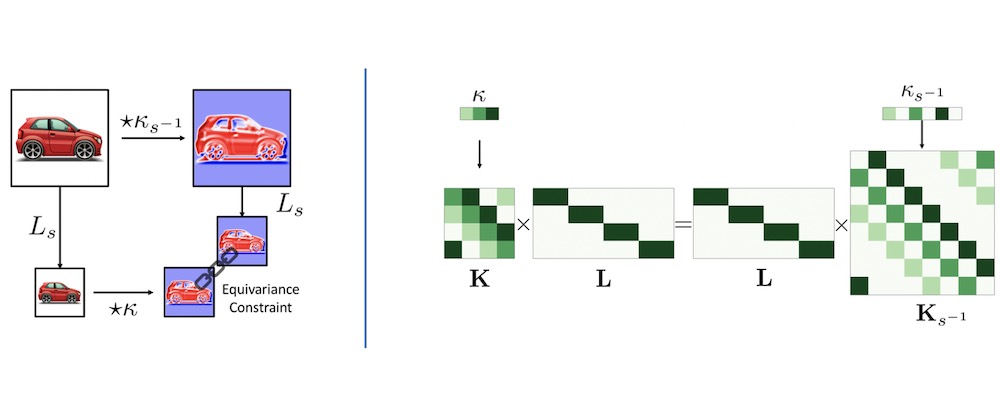

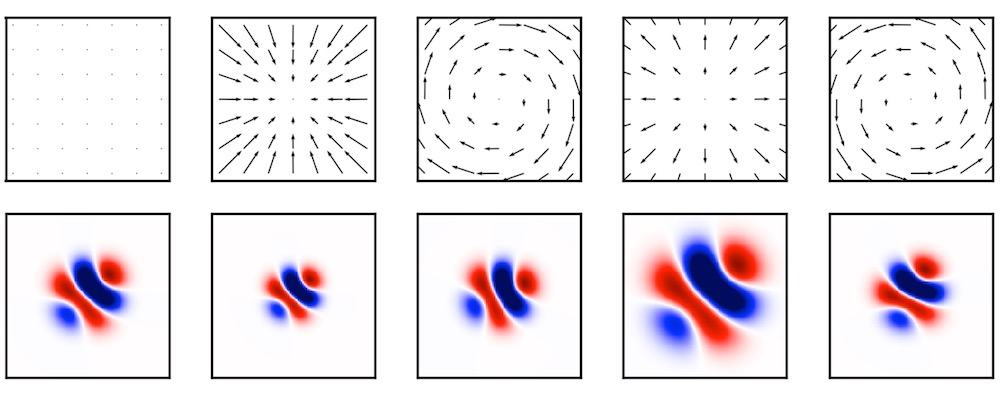

How to Transform Kernels for Scale-Convolutions

ICCV VIPriors Workshop, 2021

To reach accurate scale equivariance, we derive general constraints under which scale-convolution remains equivariant to discrete rescaling. We find the exact solution for all cases where it exists, and compute the approximation for the rest.

Two is a Crowd: Tracking Relations in Videos

Preprint, 2021

We propose a plug-in Relation Encoding Module to learn a spatio-temporal graph of correspondences between objects within and between frames. The proposed module allows for tracking even fully occluded objects by utilizing relational cues.

Built-in Elastic Transformations for Improved Robustness

Preprint, 2021

We present elastically-augmented convolutions (EAConv), which parameterize filters as a combination of fixed elastically-perturbed basis functions and trainable weights in order to integrate unseen viewpoints into CNNs.

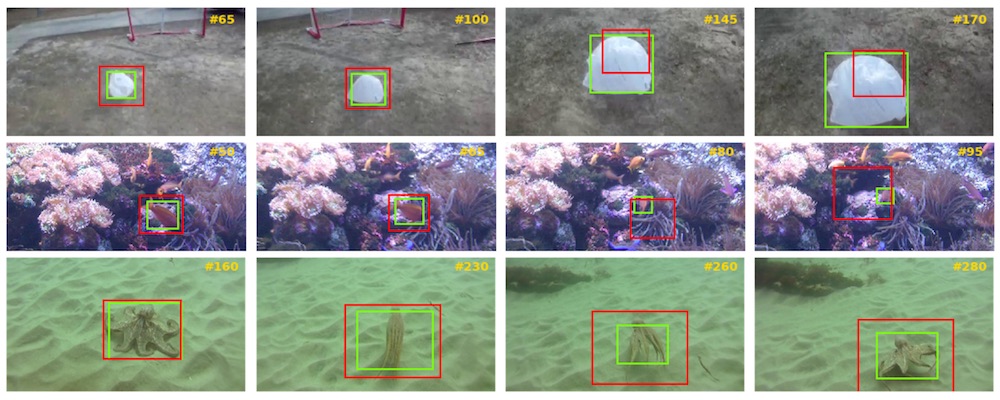

Scale Equivariance Improves Siamese Tracking

WACV, 2021

In this paper, we develop the theory for scale-equivariant Siamese trackers. We also provide a simple recipe for making a wide range of existing trackers scale-equivariant, so that they capture the natural variations of the target a priori.

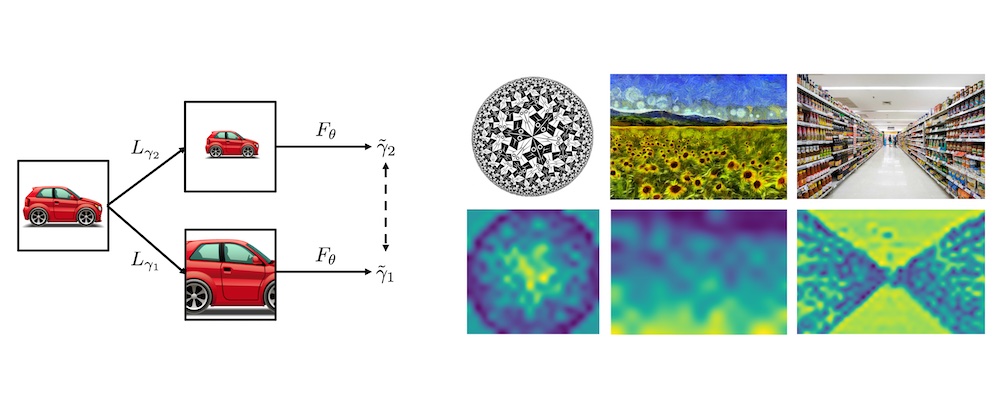

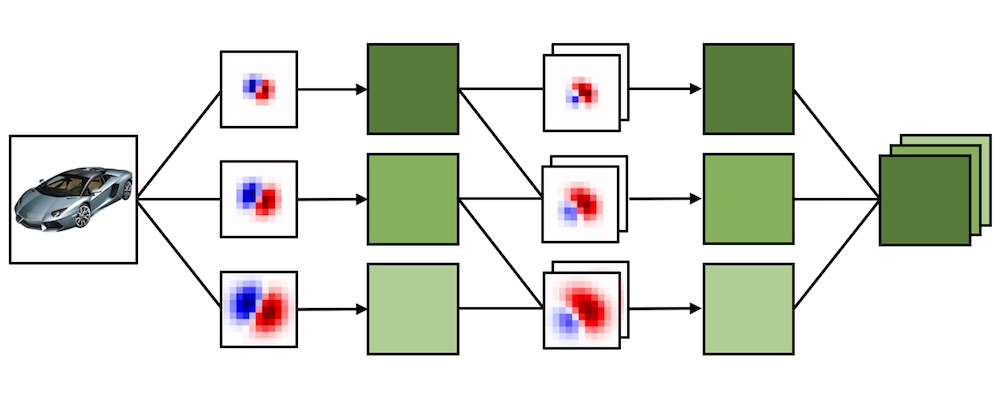

Scale-Equivariant Steerable Networks

ICLR, 2020

We introduce the general theory for building scale-equivariant convolutional networks with steerable filters. We develop scale-convolution and generalize other common blocks to be scale-equivariant.

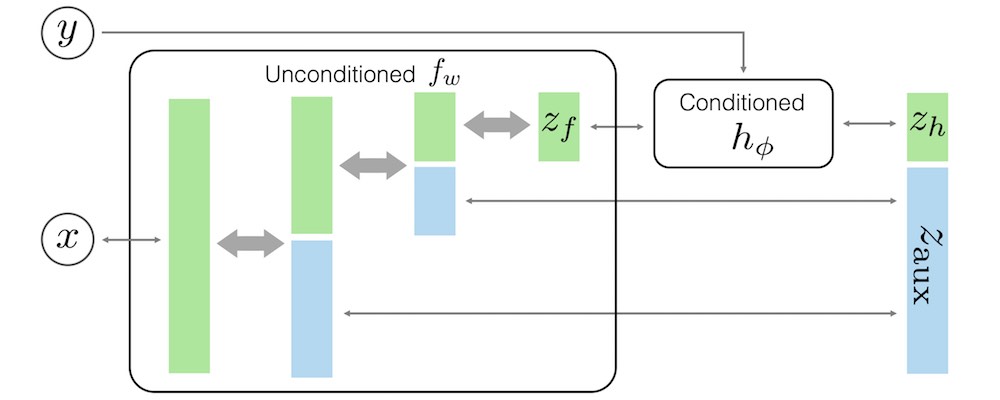

Semi-Conditional Normalizing Flows for Semi-Supervised Learning

ICML INNF, 2019

This paper proposes a semi-conditional normalizing flow model for semi-supervised learning. The model uses both labeled and unlabeled data to learn an explicit model of the joint distribution over objects and labels.

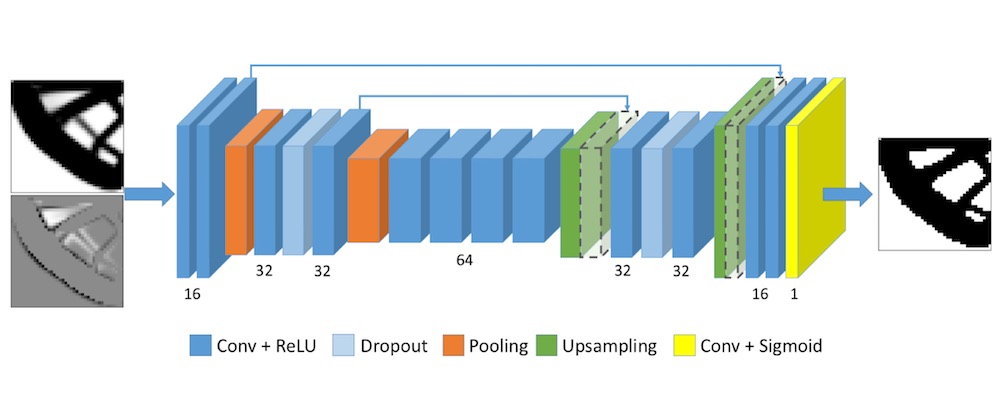

Neural Networks for Topology Optimization

Russian Journal of Numerical Analysis and Mathematical Modelling 34 (4), 2019

In this research, we propose a deep-learning-based approach for speeding up topology optimization methods. We formulate the problem as image segmentation and leverage the power of deep learning to perform pixel-wise image labeling.

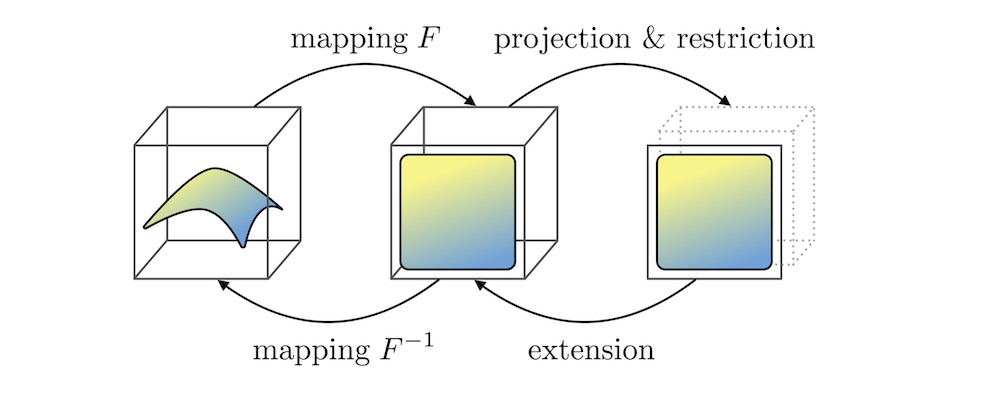

PIE: Pseudo-Invertible Encoder

Preprint, 2018

We introduce a new class of likelihood-based autoencoders with a pseudo-bijective architecture, which we call Pseudo-Invertible Encoders. We provide a theoretical explanation of their principles.

Media

- Artem Moskalev and I gave a talk on "Scale-Equivariant CNNs for Computer Vision" at Computer Vision Talks. You can watch it here.

- Artem Moskalev and I gave an interview about DISCO.

Open Source Projects

- AutoMark: a lightweight tool for testing programming assignments written in Python. It was widely used to automate the assessment of homework assignments in the "Applied Machine Learning" course at the UvA.

- ToPy: a lightweight topology optimization framework for Python that can solve compliance (stiffness), mechanism synthesis, and heat conduction problems in 2D and 3D.

Teaching

- Applied Machine Learning, University of Amsterdam, 2017 – 2020

- iOS Game Development, Skolkovo Institute of Science and Technology, 2016

Students

- Cees Kaandorp: Video summarization using contrastive learning

- Lucas Meijer: Color capsules

- Dario E. Shehni Abbaszadeh: Learning affine capsules by contrasting coordinates

- Dave Meijdam: Maximizing generalization of spatial data to minimize payload and retain visual representation

- Jonne Goedhart: Application of autoencoders to the analysis of Raman hyperspectral imagery

- Daan Ferdinandusse: Transformation-equivariant models

- Gongze Cao: On free-form normalizing flows

- Michał Szmaja: Scale-equivariant convolutional neural networks

- Jan Jetze Beitler: Orthogonal weight parametrization for dimensionality reduction in neural networks

Reviewing

- ICLR 2024

- ICLR 2023

- TPAMI 2022

- ICML INNF+ 2021

- ICCV 2021

- CVPR 2018, 2021

- ICLR 2021

- WACV 2021

- Journal of Mathematical Imaging and Vision

- Computer Vision and Image Understanding

- Engineering Optimization

- Computer Methods in Applied Mechanics and Engineering

- The Visual Computer